To compute the fundamental matrix, we first look for candidate AKAZE keypoint pairs in the two images, in the same way as before.

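Instead of the earlier RANSAC algorithm, which assumed a planar homography, we filter the point pairs with the epipolar geometric constraint. The same step also yields the fundamental matrix, which is estimated from the inlier points. The script in this section does this with `cv2.findFundamentalMat` and the `cv2.FM_LMEDS` (least-median-of-squares) robust estimator.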
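The epipolar constraint used for filtering can be checked numerically. The following is a minimal sketch, not part of the original script: it assumes the OpenCV convention that a matching pair satisfies `x2^T F x1 = 0`, and the helper name `epipolar_distances` and the toy matrix `F_demo` (a pure horizontal camera shift with identity intrinsics) are hypothetical names introduced for illustration. It measures how far each point in the second image lies from the epipolar line of its pair.

```python
import numpy as np


def epipolar_distances(F, pts1, pts2):
    """Pixel distance of each point in image 2 from the epipolar line F @ x1."""
    pts1 = np.asarray(pts1, dtype=np.float64)
    pts2 = np.asarray(pts2, dtype=np.float64)
    ones = np.ones((len(pts1), 1))
    x1 = np.hstack([pts1, ones])          # homogeneous points, image 1
    x2 = np.hstack([pts2, ones])          # homogeneous points, image 2
    lines2 = x1 @ F.T                     # row i is the line l2 = F @ x1[i]
    numerator = np.abs(np.sum(x2 * lines2, axis=1))      # |x2^T F x1|
    denominator = np.hypot(lines2[:, 0], lines2[:, 1])   # sqrt(a^2 + b^2)
    return numerator / denominator


# Toy fundamental matrix (assumption): camera translated along the x axis,
# so epipolar lines are horizontal and matching points share the same row.
F_demo = np.array([[0.0, 0.0, 0.0],
                   [0.0, 0.0, -1.0],
                   [0.0, 1.0, 0.0]])

pts1_demo = [(10.0, 20.0), (30.0, 40.0)]
pts2_demo = [(4.0, 20.0), (25.0, 43.0)]   # second pair is 3 rows off

dist = epipolar_distances(F_demo, pts1_demo, pts2_demo)
print(dist)  # the first pair satisfies the constraint, the second does not
```

A pair whose distance stays below a small pixel threshold is consistent with the estimated geometry; robust estimators rely on residuals of this kind to separate inliers from outliers.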

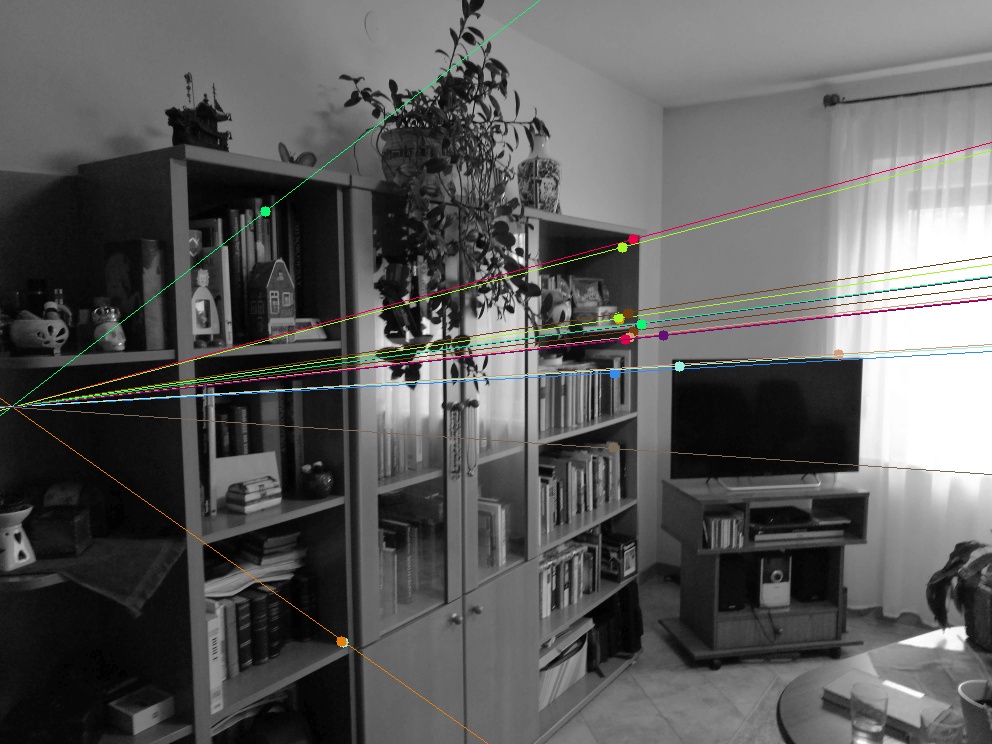

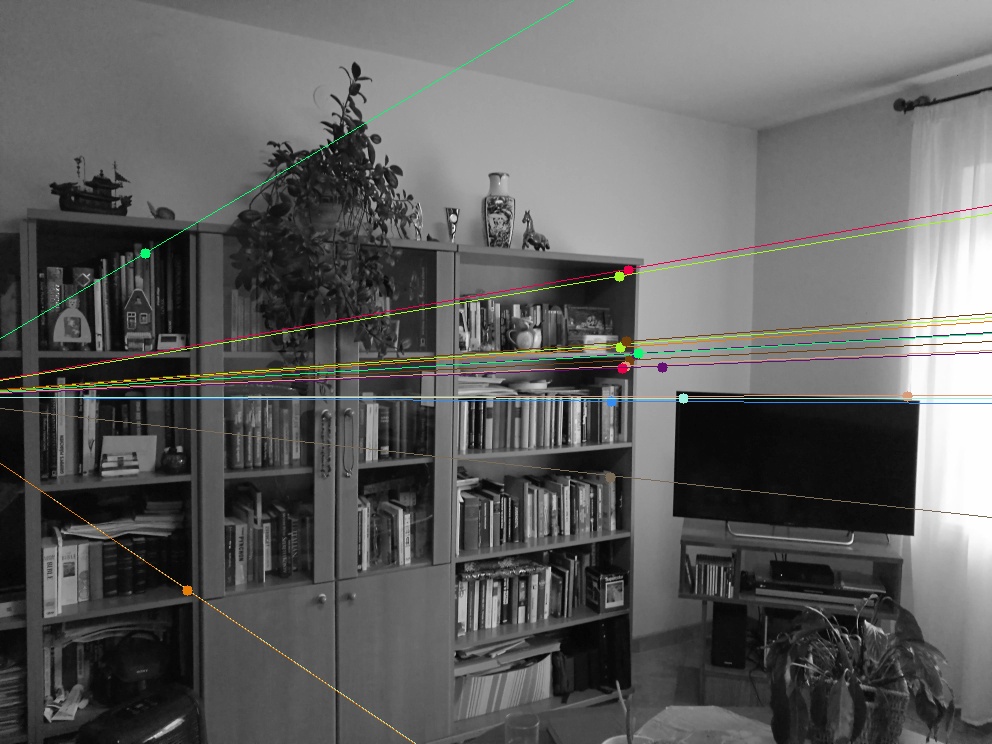

We then compute and draw the keypoints together with the epipolar lines that belong to their pairs. As the figures show, each line passes through the point drawn in the same color.
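Drawing an epipolar line comes down to turning its coefficients `(a, b, c)` of `a*x + b*y + c = 0` into two pixel endpoints. The sketch below illustrates one way to do this; the helper `epiline_endpoints` is a hypothetical name, and unlike the script in this section it also handles vertical lines, where `b` is zero.

```python
def epiline_endpoints(line, width, height, eps=1e-9):
    """Two integer endpoints of the line a*x + b*y + c = 0 inside an image."""
    a, b, c = (float(v) for v in line)
    if abs(b) > eps:
        # Non-vertical line: evaluate y at the left and right image borders.
        x0, x1 = 0.0, float(width - 1)
        y0 = -(c + a * x0) / b
        y1 = -(c + a * x1) / b
    elif abs(a) > eps:
        # Vertical line: x is constant, span the full image height.
        x0 = x1 = -c / a
        y0, y1 = 0.0, float(height - 1)
    else:
        raise ValueError("degenerate line: a and b are both zero")
    return (int(round(x0)), int(round(y0))), (int(round(x1)), int(round(y1)))


horizontal = epiline_endpoints((0.0, -1.0, 20.0), width=100, height=50)
vertical = epiline_endpoints((1.0, 0.0, -30.0), width=100, height=50)
print(horizontal)
print(vertical)
```

The endpoints are not clipped to the image, so a steep line can produce `y` values far outside it; `cv2.line` clips the segment to the image when drawing.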

```python
#
# Based on
# https://opencv-python-tutroals.readthedocs.io/en/latest/py_tutorials/py_calib3d/py_epipolar_geometry/py_epipolar_geometry.html
#

import cv2
import numpy as np

MIN_MATCH_COUNT: int = 10
img1Name = 'room_scene1.jpg'
img2Name = 'room_scene2.jpg'

# Fixed palette of 10 colors, so that a point and its epiline share a color
np.random.seed(10)
colors = [tuple(np.random.randint(0, 255, 3).tolist()) for _ in range(10)]


def drawlines(img1, img2, lines, pts1, pts2):
    ''' img1 - image on which we draw the epilines for the points in img2
        lines - corresponding epilines '''
    _, width = img1.shape
    img1 = cv2.cvtColor(img1, cv2.COLOR_GRAY2BGR)
    img2 = cv2.cvtColor(img2, cv2.COLOR_GRAY2BGR)
    for cidx, (line, pt1, pt2) in enumerate(zip(lines, pts1, pts2)):
        # color = tuple(np.random.randint(0, 255, 3).tolist())
        color = colors[cidx % len(colors)]
        a, b, c = (float(v) for v in line)
        if abs(b) > 1e-9:
            # Line a*x + b*y + c = 0 evaluated at the left and right borders
            x0, y0 = 0, int(round(-c / b))
            x1, y1 = width, int(round(-(c + a * width) / b))
            img1 = cv2.line(img1, (x0, y0), (x1, y1), color, 1)
        # cv2.circle expects plain Python ints for the center
        center1 = tuple(int(v) for v in np.round(pt1))
        center2 = tuple(int(v) for v in np.round(pt2))
        img1 = cv2.circle(img1, center1, 5, color, -1)
        img2 = cv2.circle(img2, center2, 5, color, -1)
    return img1, img2


img1 = cv2.imread(img1Name, cv2.IMREAD_GRAYSCALE)  # first (left) image
img2 = cv2.imread(img2Name, cv2.IMREAD_GRAYSCALE)  # second (right) image
if img1 is None or img2 is None:
    raise SystemExit('Could not read the input images')

# Initiate detector
kpextract = cv2.AKAZE_create()
# kpextract = cv2.ORB_create()

# Find the keypoints and descriptors
kp1, des1 = kpextract.detectAndCompute(img1, None)
kp2, des2 = kpextract.detectAndCompute(img2, None)
print('Keypoints in img1:', len(kp1))
print('Keypoints in img2:', len(kp2))

# Keypoint visualizations (not displayed below; show them with cv2.imshow if needed)
img1kp1 = cv2.drawKeypoints(img1, kp1, None, (255, 0, 0), 4)
img2kp2 = cv2.drawKeypoints(img2, kp2, None, (255, 0, 0), 4)

# Matching based on descriptor similarity
# HAMMING should be used for binary descriptors (ORB, AKAZE, ...)
# NORM_L2 (Euclidean distance) can be used for non-binary descriptors (SIFT, SURF, ...)
matcher = cv2.BFMatcher(cv2.NORM_HAMMING)
matches = matcher.knnMatch(des1, des2, 2)

# Number of matches will be the number of keypoints in img1
print('Matches found: ', len(matches))

# Store all the good matches using Lowe's ratio test.
good_match = []
match_ratio = 0.7
pts1 = []
pts2 = []
for pair in matches:
    if len(pair) < 2:
        continue  # no second neighbor, the ratio test cannot be applied
    m, n = pair
    if m.distance < match_ratio * n.distance:
        good_match.append(m)
        pts1.append(kp1[m.queryIdx].pt)
        pts2.append(kp2[m.trainIdx].pt)
print("Potential good matches found:", len(good_match))

# Computing correspondences
if len(good_match) < MIN_MATCH_COUNT:
    raise SystemExit("Not enough matches are found - %d/%d" % (len(good_match), MIN_MATCH_COUNT))

# Keep sub-pixel coordinates for the estimation; round only when drawing
pts1 = np.float32(pts1)
pts2 = np.float32(pts2)

F, mask = cv2.findFundamentalMat(pts1, pts2, cv2.FM_LMEDS)
if F is None or mask is None:
    raise SystemExit('Fundamental matrix estimation failed')
print(F)
print(np.linalg.matrix_rank(F))

# We select only inlier points
inliers = mask.ravel() == 1
pts1 = pts1[inliers]
pts2 = pts2[inliers]
print('Used number of point pairs:', len(pts1))

# Find epilines corresponding to points in right image (second image) and
# draw them on the left image
lines1 = cv2.computeCorrespondEpilines(pts2.reshape(-1, 1, 2), 2, F)
lines1 = lines1.reshape(-1, 3)
img5, img6 = drawlines(img1, img2, lines1, pts1, pts2)

# Find epilines corresponding to points in left image (first image) and
# draw them on the right image
lines2 = cv2.computeCorrespondEpilines(pts1.reshape(-1, 1, 2), 1, F)
lines2 = lines2.reshape(-1, 3)
img3, img4 = drawlines(img2, img1, lines2, pts2, pts1)

cv2.imshow('ep1', img5)
cv2.imshow('ep2', img3)
cv2.waitKey(0)
cv2.destroyAllWindows()
```
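The script prints the rank of `F` because a valid fundamental matrix has rank 2. Its null vectors are the epipoles, the points where all epipolar lines of an image meet. The sketch below shows how they could be read off with an SVD; it is an illustration only, and `F_demo` is a hypothetical toy matrix (pure horizontal camera shift), not a result from the images above.

```python
import numpy as np

# Toy fundamental matrix (assumption): camera translated along the x axis.
F_demo = np.array([[0.0, 0.0, 0.0],
                   [0.0, 0.0, -1.0],
                   [0.0, 1.0, 0.0]])

rank = np.linalg.matrix_rank(F_demo)

# SVD: the singular vectors of the zero singular value span the null spaces.
U, S, Vt = np.linalg.svd(F_demo)
epipole1 = Vt[-1]      # right null vector: F @ epipole1 = 0 (epipole in image 1)
epipole2 = U[:, -1]    # left null vector: epipole2 @ F = 0 (epipole in image 2)

print('rank:', rank)
print('epipole in image 1 (homogeneous):', epipole1)
print('epipole in image 2 (homogeneous):', epipole2)
```

In this toy case the third homogeneous coordinate of both epipoles is zero, so they lie at infinity and the epipolar lines are parallel. For a general image pair the epipole can be converted to pixel coordinates by dividing by its third coordinate.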