Picire - Token-based test case reduction

Picire is the product of an active research group in Szeged, Hungary. The main focus of this group is to find effective ways to fuzz different software engines and also effectively post-process the output of the fuzzer (test case reduction). This post is about the latter task, reducing the output of the fuzzer in the least amount of time. During the last decade, several researchers added their bit to the tool (kudos!), and it has been open-sourced by the first author, Renáta Hodován (LinkedIn). Reference to the GitHub repository: renatahodovan/picire.

Picire

Picire is a Python implementation of the Delta Debugging algorithm supporting parallelization and further configuration options. It can be used either as a command line tool or as a library. Just like the original algorithm, Picire automatically reduces “interesting” tests while keeping their “interesting” behavior. A common use case is minimizing failing tests so that they still reproduce the original failure. The tool (and the algorithm) works iteratively. As a first step, it splits up the input into n chunks either by lines or characters. Then, iteratively, it inspects smaller test cases composed of these chunks whether they are still interesting. The selection of chunks can happen two ways: either a small subset of the chunks is kept (subset-based reduce), or that small subset is removed and everything else is kept (complement-based reduce). If a new interesting test case is found, it becomes the input of the next iteration. The iterations stop if removing any further chunks would make the test uninteresting (e.g. the test is 1-minimal).

If one would like to reduce text-based test cases, Picire provides good support. It can reduce its input line-by-line, or as a character sequence, or even a combined approach: the input is first reduced at line-level, then at character-level. The line-level reduction is faster, but the results might contain superfluous characters in the lines. At the other hand, character-level reduction is slower, but the results are usually smaller.

If you are interested in the research behind the tool, check the references papers in the README of the repository.

Token-based Reduction

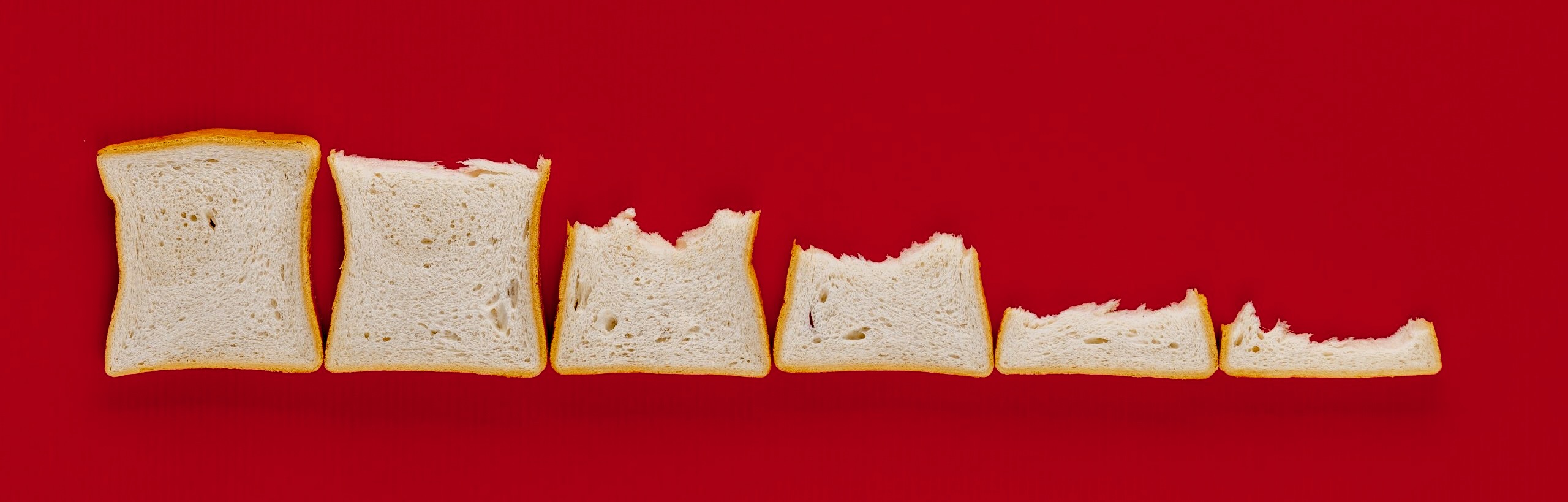

Reducing something can be done two ways.

If we know the structure of that something (a grammar for example), that could be really useful: smaller output can be found quicker.

If that’s not the case, a structure-unaware approach should be used: Picire does this way!

However, if we are dealing with rapidly developing programming languages, then the grammar might be out of date every day.

In this case, a “token-based” approach might come very handy.

Tokens are the smallest units of a program (keywords, operators, etc.), breaking them might not be clever if we are searching for a syntactically correct program that causes a crash in its execution engine.

|

|---|

| Tokens in C language (Geeks for Geeks). |

Reducing the “sumprod10” program

Suppose that we are interested in printing the product of the first 10 natural numbers to the console, and we have a program that does it, but it also has some superfluous parts. We need two things: the input that has to be reduced, and an oracle script that decides whether a reduction step is successful [or not].

// sumprod10.c

#include <stdio.h>

int add(int a, int b)

{

return a + b;

}

int mul(int a, int b)

{

return a * b;

}

void main()

{

int sum = 0;

int prod = 1;

for (int i = 1; i <= 10; i++)

{

sum = add(sum, i);

prod = mul(prod, i);

}

printf("sum: %d\n", sum);

printf("prod: %d\n", prod);

}

The program computes both the sum and the product of the first ten natural numbers in a single loop. We can say that we want to create a sub-program that does not contain statements that do not contribute to the value of prod at line 20. To achieve this goal, the oracle should look like this:

#! /bin/bash

if ! gcc -Werror=implicit-int -Wno-error=implicit-function-declaration -Werror=return-type $1 -o $1.exe >$1.gcc.log 2>&1; then

exit 1 # sources that cannot even be compiled are not interesting

fi

gtimeout 1 $1.exe | grep -q "prod: 3628800"

It returns 0 when the interesting property is found and 1 if not.

Things are clear so far, but how can we reduce it considering the boundaries of the language tokens?

Option A: Reformatting the input

If we don’t really want to invest work in it, formatting can save lots of time. For example, a file that has one token per line is an acceptable input format for the C compilers. (Of course that works if the SUT behaves similarly.) And voila, you have a “tokenized” file, line-level reduction should do the magic.

#include <stdio.h>

int

add

(

int

a

,

int

b

)

{

return

a

+

b

;

}

int

mul

(

int

a

,

int

b

)

{

return

a

*

b

;

}

void

main

(

)

{

int

sum

=

0

;

int

prod

=

1

;

for

(

int

i

=

1

;

i

<=

10

;

i++

)

{

sum

=

add

(

sum

,

i

)

;

prod

=

mul

(

prod

,

i

)

;

}

printf(

"sum: %d\n"

,

sum

)

;

printf(

"prod: %d\n"

,

prod

)

;

}

So far, Picire can be used from the command line and from its API as well. To install it, I would recommend using the GitHub repository instead of the pip package manager, since the package has not been updated for a while.

git clone git@github.com:renatahodovan/picire.git

cd picire

pip install .

$ picire --version

picire 21.8.post41+g7f237cd

If everything is written to the disk, the following command executes the reduction:

#! /bin/bash

picire \

--atom line \

--log-level ERROR \

--complement-first \

--subset-iterator skip \

--complement-iterator backward \

--cache content-hash \

--test path/to/oracle.sh \

--input path/to/sumprod10.c \

--out path/to/output/ \

--parallel \

--jobs 4

Then, the reduced file will be written to the given folder (path/to/output/sumprod10.c).

Option B: Using the API

If you are maintaining a fuzzer framework that randomly generates inputs and feed them into a SUT, this way might suit better. Picire defines a Python API that can be used.

Suppose that you have the tokenized format from the fuzzer output, the only task is to feed to the Picire:

# Import the picire module (pip install picire)

import picire

from os import environ

from pathlib import Path

from subprocess import Popen, PIPE

# Helper function to execute shell commands.

def run_command(command, cwd, env=environ):

process = Popen(command,

cwd=cwd.resolve(),

env=env,

stdout=PIPE,

stderr=PIPE)

out, err = process.communicate()

stdout = str(out, encoding='utf-8')

stderr = str(err, encoding='utf-8')

return process.returncode, stdout, stderr

# The input tokens, can be anything. We would like to reduce this list.

tokens = [

"#include <stdio.h>",

"int", "add", "(", "int", "a", ",", "int", "b", ")",

"{", "return", "a", "+", "b", ";", "}",

"int", "mul", "(", "int", "a", ",", "int", "b", ")", "{", "return", "a", "*", "b", ";", "}",

"void", "main", "(", ")", "{",

"int", "sum", "=", "0", ";",

"int", "prod", "=", "1", ";",

"for", "(", "int", "i", "=", "1", ";", "i", "<=", "10", ";", "i++", ")", "{",

"sum", "=", "add", "(", "sum", ",", "i", ")", ";",

"prod", "=", "mul", "(", "prod", ",", "i", ")", ";", "}",

"printf(", "\"sum: %d\\n\"", ",", "sum", ")", ";",

"printf(", "\"prod: %d\\n\"", ",", "prod", ")", ";", "}"

]

# print("\n".join(tokens)) # check the contents

# The oracle that decides the interestingness of a config (list).

# Should be thread safe if the reduction is done parallel.

# Should be customized for the specific task.

def oracle(config, config_id):

file_name = '_'.join(config_id)

source_file = Path(f'{file_name}.c')

binary_file = Path(f'{file_name}.exe')

# Write out the file to be able to compile with GCC

with open(source_file, 'w') as text_file:

text_file.write('\n'.join(config))

# Compilation

command = [

'gcc',

'-Werror=implicit-int',

'-Wno-error=implicit-function-declaration',

'-Werror=return-type',

str(source_file),

'-o', str(binary_file)

]

outcome = None

retcode, out, _ = run_command(command, Path.cwd())

if retcode != 0:

outcome = picire.Outcome.PASS

command = ['gtimeout', '1', f'./{str(binary_file)}']

_, out, _ = run_command(command, Path.cwd())

if 'prod: 3628800' in out:

outcome = picire.Outcome.FAIL

return outcome

# Test whether the initial configuration is interesting

# output = oracle(tokens, ('i0', 'r0', 'c1'))

class CaseTest:

def __init__(self, interesting, content):

self.content = content

self.interesting = interesting

def __call__(self, config, config_id):

return self.interesting(config, config_id)

iterator = picire.iterator.CombinedIterator(

False, # don't start with subset iterator

picire.iterator.skip, # skip reduction to subset steps

picire.iterator.backward) # reduction to complement is performed in backward syntactic order

reducer = picire.ParallelDD(CaseTest(oracle, tokens),

cache=picire.cache.ConfigCache(),

dd_star=True,

config_iterator=iterator,

proc_num=8)

output = reducer(tokens)

# print(' '.join(output))

At the end, saving and executing the script:

$ python api_test.py

The oracle function generates i*.c and i*.exe files to the current working directory for checking different configurations.

They might be deleted on the fly as well, I didn’t do that, so the reduction process can be followed manually by checking the .c files.

The reduced list is in the output variable, can be used to open a bug report 🚀